Java集合分析6

散列

[toc]

散列

一般想法

每个key都能完美的映射到表中.

散列函数

不存在完美的映射, 需要一个高效的函数 p = f(key). f称为散列函数. 通常, 关键字是一个字符串, 散列函数需要很好的选择

把字符串中字符的ASCII码或UniCode码的值加起来

//缺陷, 如果表很大, 函数将会不好分配, 例如key最多8个字符, 那么散列函数最多分配 127 * 8 = 1016 之间. public static int hash(String key, int tableSize) { int hashVal = 0; for(int i = 0; i < key.length; i ++) { hashVal += key.charAt(i); } return hashVal % tableSize; }一个稍微好的散列函数

public static int hash(String key, int tableSize) { int hashVal = 0; for(int i = 0; i < key.length; i ++) { hashVal = 37 + hashVal + key.charAt(i); } hashVal %= tableSize; //允许溢出 if(hashVal < 0) { hashVal += tableSize; } return hashVal; }Hornor 法则:有一些关于多项式求值的问题。对于多项式求值问题,我们最容易想到的算法是求出每一项的值然后把所求的值累加起来,这种算法的时间和空间效率都不高,对于数据规模不大的题目来说由于其直观、简单很容易被大家采纳,可一旦数据规模过大时,这种算法就显得无能为力了,下面介绍一种解决这类求值问题的高效算法――霍纳法则。在中国,霍纳法则也被称为秦九韶算法。

Java中HashCode的实现

/**

* Returns a hash code for this string. The hash code for a

* {@code String} object is computed as

* <blockquote><pre>

* s[0]*31^(n-1) + s[1]*31^(n-2) + ... + s[n-1] //多项式求和

* </pre></blockquote>

* using {@code int} arithmetic, where {@code s[i]} is the

* <i>i</i>th character of the string, {@code n} is the length of

* the string, and {@code ^} indicates exponentiation.

* (The hash value of the empty string is zero.)

*

* @return a hash code value for this object.

*/

public int hashCode() {

int h = hash;

if (h == 0 && value.length > 0) {

char val[] = value;

for (int i = 0; i < value.length; i++) {

h = 31 * h + val[i];

}

hash = h;

}

return h;

一个有趣的讨论

Why does Java’s hashCode() in String use 31 as a multiplier?

结论推测为早期的JVM优化31作为乘子可以优化 i* 31 = (i << 5) - i , 不过随着现代编译器的性能优化, 这个可能也不算是一个非常大的问题.

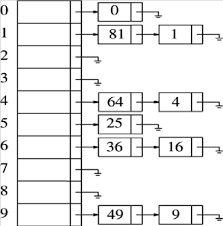

解决冲突: 分离链法

将散列到同一个值的所有元素保存到一个表中.

HashMap的源码解读

基本构成

public class HashMap<K,V> extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable{}

//继承自AbstractMap实现了Map的接口

//节点 理论上是一个链表中的节点.

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next; //冲突 桶中指向下一个节点.

Node(int hash, K key, V value, Node<K,V> next) {

this.hash = hash;

this.key = key;

this.value = value;

this.next = next;

}

public final K getKey() { return key; }

public final V getValue() { return value; }

public final String toString() { return key + "=" + value; }

public final int hashCode() {

return Objects.hashCode(key) ^ Objects.hashCode(value);

}

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

/**

Key 和value 都相等才相等. (比较两个节点.)

*/

public final boolean equals(Object o) {

if (o == this)

return true;

if (o instanceof Map.Entry) {

Map.Entry<?,?> e = (Map.Entry<?,?>)o;

if (Objects.equals(key, e.getKey()) &&

Objects.equals(value, e.getValue()))

return true;

}

return false;

}

}

//TreeNode 待定 后续讲到Java8 将桶优化为红黑树再详细说明.

//定义的一些常量

/**

* The load factor for the hash table.

* 加载因子是表示Hsah表中元素的填满的程度.若:加载因子越大,填满的元素越多,好处是,空间利用率高了,但:冲突的机会加大了.反之,加载因子越小,填满的元 素越少,好处是:冲突的机会减小了,但:空间浪费多了.

* @serial

*/

final float loadFactor;

/**

* The next size value at which to resize (capacity * load factor).

*

* @serial

*/

// (The javadoc description is true upon serialization.

// Additionally, if the table array has not been allocated, this

// field holds the initial array capacity, or zero signifying

// DEFAULT_INITIAL_CAPACITY.)

int threshold;

//定义的一些常量

/**

* The default initial capacity - MUST be a power of two. 默认的初始化容量 必须是2的指数个

*/

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**

* The maximum capacity, used if a higher value is implicitly specified

* by either of the constructors with arguments.

* MUST be a power of two <= 1<<30. 最大容量

*/

static final int MAXIMUM_CAPACITY = 1 << 30;

/*

1 << 32 = 1

1 << 31 = -2147483648

1 << 30 = 1073741824

*/

/**

* The bin count threshold for using a tree rather than list for a

* bin. Bins are converted to trees when adding an element to a

* bin with at least this many nodes. The value must be greater

* than 2 and should be at least 8 to mesh with assumptions in

* tree removal about conversion back to plain bins upon

* shrinkage.

桶的形态发生改变的阈值. 链表向树形态变换的临界值

*/

static final int TREEIFY_THRESHOLD = 8;

/**

桶的形态发生改变的阈值. 树向链表形态变换的临界值

* The bin count threshold for untreeifying a (split) bin during a

* resize operation. Should be less than TREEIFY_THRESHOLD, and at

* most 6 to mesh with shrinkage detection under removal.

*/

static final int UNTREEIFY_THRESHOLD = 6;

/**

TODO explain

* The smallest table capacity for which bins may be treeified.

* (Otherwise the table is resized if too many nodes in a bin.)

* Should be at least 4 * TREEIFY_THRESHOLD to avoid conflicts

* between resizing and treeification thresholds.

*/

static final int MIN_TREEIFY_CAPACITY = 64;

/*

* Holds cached entrySet(). Note that AbstractMap fields are used

* for keySet() and values().

*/

transient Set<Map.Entry<K,V>> entrySet;

/**

HashMap的桶

* The table, initialized on first use, and resized as

* necessary. When allocated, length is always a power of two.

* (We also tolerate length zero in some operations to allow

* bootstrapping mechanics that are currently not needed.)

*/

transient Node<K,V>[] table;

/**

* The number of key-value mappings contained in this map.

*/

transient int size;

/**

* The number of times this HashMap has been structurally modified

* Structural modifications are those that change the number of mappings in

* the HashMap or otherwise modify its internal structure (e.g.,

* rehash). This field is used to make iterators on Collection-views of

* the HashMap fail-fast. (See ConcurrentModificationException).

*/

transient int modCount;

构造函数

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and load factor.

*

* @param initialCapacity the initial capacity 2的指数次幂个.

* @param loadFactor the load factor

* @throws IllegalArgumentException if the initial capacity is negative

* or the load factor is nonpositive

*/

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity);

}

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and the default load factor (0.75).

*

* @param initialCapacity the initial capacity.

* @throws IllegalArgumentException if the initial capacity is negative.

*/

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR); // 3/4 * 16 = 12个

}

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted

}

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false);

}

hash函数的原理解释, 可以参考hash函数的原理解读

/**

* Computes key.hashCode() and spreads (XORs) higher bits of hash

* to lower. Because the table uses power-of-two masking, sets of

* hashes that vary only in bits above the current mask will

* always collide. (Among known examples are sets of Float keys

* holding consecutive whole numbers in small tables.) So we

* apply a transform that spreads the impact of higher bits

* downward. There is a tradeoff between speed, utility, and

* quality of bit-spreading. Because many common sets of hashes

* are already reasonably distributed (so don't benefit from

* spreading), and because we use trees to handle large sets of

* collisions in bins, we just XOR some shifted bits in the

* cheapest possible way to reduce systematic lossage, as well as

* to incorporate impact of the highest bits that would otherwise

* never be used in index calculations because of table bounds.

*/

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}

工具

/**

* Returns a power of two size for the given target capacity.

此方法用与找到大于等于给定cap最接近的指数幂

*/

static final int tableSizeFor(int cap) {

/*

首先,为什么要对cap做减1操作。int n = cap - 1;

这是为了防止,cap已经是2的幂。如果cap已经是2的幂, 又没有执行这个减1操作,则执行完后面的几条无符号右移操作之后,返回的capacity将是这个cap的2倍。如果不懂,要看完后面的几个无符号右移之后再回来看看。

*/

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

/*

注意,容量最大也就是32bit的正数,因此最后n |= n >>> 16; ,最多也就32个1(但是这已经是负数了。在执行tableSizeFor之前,对initialCapacity做了判断,如果大于MAXIMUM_CAPACITY(2 ^ 30),则取MAXIMUM_CAPACITY。如果等于MAXIMUM_CAPACITY(2 ^ 30),会执行移位操作。所以这里面的移位操作之后,最大30个1,不会大于等于MAXIMUM_CAPACITY。30个1,加1之后得2 ^ 30)

*/

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}

/**

* 初始化或者扩容两倍

* Initializes or doubles table size. If null, allocates in

* accord with initial capacity target held in field threshold.

* Otherwise, because we are using power-of-two expansion, the

* elements from each bin must either stay at same index, or move

* with a power of two offset in the new table.

*

* @return the table

*/

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

//old capacity

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

//

int newCap, newThr = 0;

//如果旧容量大于0

if (oldCap > 0) {

//如果旧容量大于等于最大容量。

if (oldCap >= MAXIMUM_CAPACITY) {

//

threshold = Integer.MAX_VALUE;

return oldTab;

}

//如果(newCap = oldCap 的2倍)< 最大容量并且 oldCap >= 初始化容量

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0) // initial capacity was placed in threshold

newCap = oldThr;

else { // zero initial threshold signifies using defaults

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

if (oldTab != null) {

//将oldTab赋值给newTab

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof TreeNode)

// 将红黑树拆分成链表.

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}

增

/**

* Associates the specified value with the specified key in this map.

* If the map previously contained a mapping for the key, the old

* value is replaced.

*

* @param key key with which the specified value is to be associated

* @param value value to be associated with the specified key

* @return the previous value associated with <tt>key</tt>, or

* <tt>null</tt> if there was no mapping for <tt>key</tt>.

* (A <tt>null</tt> return can also indicate that the map

* previously associated <tt>null</tt> with <tt>key</tt>.)

*/

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

/**

* Implements Map.put and related methods

*

* @param hash hash for key

* @param key the key

* @param value the value to put

* @param onlyIfAbsent if true, don't change existing value

* @param evict if false, the table is in creation mode.

* @return previous value, or null if none

*/

final V putVal(int hash, K key, V value, boolean onlyIfAbsent, boolean evict) {

//桶, 这个就是存储元素的桶 = table (field of class)

Node<K,V>[] tab;

Node<K,V> p;

int n, i;

//如果

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

else if (p instanceof TreeNode)

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

if (++size > threshold)

resize();

afterNodeInsertion(evict);

return null;

}